Fat vs. Universal

Sounds like the MPAA looking to devour another tasty morsel, compliments of our fine legislative system. But today’s topic is Virtual Machines, not virtual monopolies. By “Virtual Machine”, I don’t mean “Virtual Computer”; I mean “Virtual Processor”. There’s much to be said about the uses and shortcomings of virtual machines, but I’d like to focus specifically on how the technology could benefit, or could have benefited, Apple, formerly Computer.

Apple has the privilege of designing it all: hardware, operating system and a leading share of the applications. Contrast “design” with “dictate”: it wouldn’t be practical for Apple to design all the chips it needs, nor can it write software for every niche that needs some. Even its operating system, whether due to beneficence or lack of market domination, follows an admirable number of external standards. Among the outside technologies it has adopted is LLVM, a compiler infrastructure built around a low-level virtual instruction set and already integrated into part of Leopard’s graphics system.

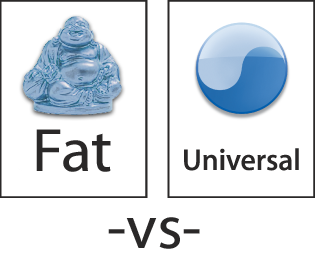

The two outside ends, hardware and applications, are the areas where Apple must take extra care in its design to defer to the plans of its suppliers and developers. And when it comes to processor choice, both come into play. Apple has already led a fairly smooth transition from PowerPC-compiled applications to so-called “Universal Binaries”. These programs are really just fat binaries, a technology that has been in use on the Mac since the move from 68k to PowerPC.
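For the curious, here is a rough sketch of what “fat” means in practice. A fat Mach-O file opens with a small big-endian header: a magic number, a count, and then one fixed-size record per architecture saying where that slice of compiled code lives in the file. The function names below (`list_architectures`, `make_fat_header`) are my own inventions for illustration, and the header is a synthetic one built in memory with made-up offsets and sizes rather than read from a real application.

```python
import struct

FAT_MAGIC = 0xCAFEBABE               # magic number that opens a fat Mach-O file
CPU_NAMES = {7: "i386", 18: "ppc"}   # CPU type codes for Intel and PowerPC


def list_architectures(data):
    """Return the architecture names listed in a fat header (illustrative helper)."""
    magic, count = struct.unpack_from(">II", data, 0)
    if magic != FAT_MAGIC:
        raise ValueError("not a fat binary")
    names = []
    for i in range(count):
        # Each record: cputype, cpusubtype, offset, size, align (20 bytes, big-endian).
        cputype, _subtype, _offset, _size, _align = struct.unpack_from(
            ">iiIII", data, 8 + i * 20
        )
        names.append(CPU_NAMES.get(cputype, "unknown(%d)" % cputype))
    return names


def make_fat_header(cputypes):
    """Build a synthetic fat header for demonstration; offsets and sizes are invented."""
    header = struct.pack(">II", FAT_MAGIC, len(cputypes))
    for i, cputype in enumerate(cputypes):
        header += struct.pack(">iiIII", cputype, 0, 4096 * (i + 1), 1024, 12)
    return header


if __name__ == "__main__":
    print(list_architectures(make_fat_header([18, 7])))
```

The point of the sketch is that nothing in the file is processor-neutral: the loader picks whichever slice matches the machine and ignores the rest.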

“Universal” is a misnomer: the text may read one way but, as the dyadic logo implies, the reality is that most Universal Binaries are compiled for only two platforms, PowerPC and Intel. The next time Apple asks its developers to “check the box” and recompile could be the last for a long time if they move to an actual Universal Binary compiled to run on top of a Virtual Machine. Apple’s involvement in LLVM has been known since at least 2005, and I have no doubt they could easily pull it off. It might feel like joining the ranks of Microsoft “Why are they yelling?” .NET and Java with its “write once, ugly anywhere”, but I think it would still warrant applause at a future WWDC.